NEWS

Moderation Matters: How does content moderation work?

Ever wonder how social media platforms make content moderation decisions?

David Jurgens explains how it works: policies, penalties, and detection.

In our Moderation Matters videos, we explore important concepts related to content moder...

Moderation Matters: Why is social media content moderated?

Content moderation happens on social media platforms every day. Why do they do it?

Paul Resnick explains why content on social media may be moderated and why it may lead to better experiences.

In our Moderation Matters videos, we explore important co...

Nature News: Deepfakes, trolls and cybertroopers: how social media could sway elections in 2024

Paul Resnick has the (literal) last word in a Nature News story about how social media could sway elections around the world in 2024 and how researchers are responding to challenging changes in the landscape.

Read the article from Nature News.

Assessment of Discoverability Metrics for Harmful Content

Many stakeholders are interested in tracking prevalence metrics of harmful content on social media platforms. TrustLab tracks several metrics and has produced a report for the European Commission's Code of Practice. One of TrustLab's prevalence metrics, wh...

Wired: The New Era of Social Media Looks as Bad for Privacy as the Last One

Since Twitter began its “slow-motion implosion,” a slew of new social media platforms has cropped up, including Bluesky, Mastodon, and Threads. This is good news for users who still want to connect, but bad news for their privacy.

A new story from Wire...

The Shapes of the Fourth Estate During the Pandemic: Profiling COVID-19 News Consumption in Eight Countries

News media is often referred to as the Fourth Estate, a recognition of its political power. New understandings of how media shape political beliefs and influence collective behaviors are urgently needed in an era when public opinion polls do not necessaril...

Remove, Reduce, Inform: What Actions do People Want Social Media Platforms to Take on Potentially Misleading Content?

To reduce the spread of misinformation, social media platforms may take enforcement actions against offending content, such as adding informational warning labels, reducing distribution, or removing content entirely. However, both their actions and their i...

GuesSync!: An Online Casual Game To Reduce Affective Polarization

The past decade in the US has been one of the most politically polarizing in recent memory. Ordinary Democrats and Republicans fundamentally dislike and distrust each other, even when they agree on policy issues. This increase in hostility towards opposing...

How We Define Harm Impacts Data Annotations: Explaining How Annotators Distinguish Hateful, Offensive, and Toxic Comments

Computational social science research has made advances in machine learning and natural language processing that support content moderators in detecting harmful content. These advances often rely on training datasets annotated by crowdworkers for harmful c...

When Do Annotator Demographics Matter? Measuring the Influence of Annotator Demographics with the POPQUORN Dataset

Annotators are not fungible. Their demographics, life experiences, and backgrounds all contribute to how they label data. However, NLP has only recently considered how annotator identity might influence their decisions. Here, we present POPQUORN (the Potat...

Profile Update: The Effects of Identity Disclosure on Network Connections and Language

Our social identities determine how we interact and engage with the world surrounding us. In online settings, individuals can make these identities explicit by including them in their public biography, possibly signaling a change to what is important to th...

How to Train Your YouTube Recommender to Avoid Unwanted Videos

YouTube provides features for users to indicate disinterest when presented with unwanted recommendations, such as the "Not interested" and "Don't recommend channel" buttons. These buttons are purported to allow the user to correct "mistakes" made by the re...

Bursts of contemporaneous publication among high- and low-credibility online information providers

In studies of misinformation, the distinction between high- and low-credibility publishers is fundamental. However, there is much that we do not know about the relationship between the subject matter and timing of content produced by the two types of publi...

Prevalence Estimation in Social Media Using Black Box Classifiers

Many problems in computational social science require estimating the proportion of items with a particular property. This counting task is called prevalence estimation or quantification. Frequently, researchers have a pre-trained classifier available to th...
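As a rough illustration of the quantification problem described above, here is a minimal Python sketch of two textbook estimators: naive classify-and-count, and adjusted classify-and-count, which corrects the naive count using the classifier's known error rates. This is not necessarily the method proposed in the paper; the function names are hypothetical, and the classifier's true-positive and false-positive rates are assumed to have been measured on a separate human-labeled sample.

```python
from typing import Sequence


def classify_and_count(preds: Sequence[int]) -> float:
    """Naive prevalence estimate: the share of items the classifier labels positive (1)."""
    if not preds:
        raise ValueError("need at least one prediction")
    return sum(preds) / len(preds)


def adjusted_classify_and_count(preds: Sequence[int], tpr: float, fpr: float) -> float:
    """Correct the naive estimate for classifier error.

    Assumption: tpr (true-positive rate) and fpr (false-positive rate) were
    estimated on a separate labeled sample drawn like the target data.
    The observed positive rate satisfies p_obs = tpr * p + fpr * (1 - p),
    so the true prevalence is p = (p_obs - fpr) / (tpr - fpr).
    """
    if tpr <= fpr:
        raise ValueError("tpr must exceed fpr for the correction to be defined")
    p_obs = classify_and_count(preds)
    p = (p_obs - fpr) / (tpr - fpr)
    # Sampling noise can push the estimate outside [0, 1], so clip it.
    return min(1.0, max(0.0, p))
```

For example, if 30 of 100 posts are flagged and the classifier has a true-positive rate of 0.9 and a false-positive rate of 0.1, the adjusted estimate is (0.3 - 0.1) / (0.9 - 0.1) = 0.25, lower than the naive 0.3 because some flags are false positives.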

Bridging Nations: Quantifying the Role of Multilinguals in Communication on Social Media

Social media enables the rapid spread of many kinds of information, from pop culture memes to social movements. However, little is known about how information crosses linguistic boundaries. We apply causal inference techniques on the European Twitter netwo...

Searching for or reviewing evidence improves crowdworkers’ misinformation judgments and reduces partisan bias

Can crowd workers be trusted to judge whether news-like articles circulating on the Internet are misleading, or do partisanship and inexperience get in the way? And can the task be structured in a way that reduces partisanship? We assembled pools of both...

Wisdom of Two Crowds: Misinformation Moderation on Reddit and How to Improve this Process – A Case Study of COVID-19

Past work has explored various ways for online platforms to leverage crowd wisdom for misinformation detection and moderation. Yet, platforms often relegate governance to their communities, and limited research has been done from the perspective of these c...

Paul Resnick files amicus brief for Gonzalez v. Google Supreme Court case

A Supreme Court case could alter algorithmic recommendation systems on websites like YouTube by challenging a 1996 law: Section 230 of the Communications Decency Act, which protects websites from liability arising from user content.

In an ...

CSMR's WiseDex team completes Phase 1 of the NSF Convergence Accelerator

Social media companies have policies against harmful misinformation. Unfortunately, enforcement is uneven, especially for non-English content. WiseDex harnesses the wisdom of crowds and AI techniques to help flag more posts. The result is more comprehensiv...

Nazanin Andalibi to give keynote speech at Reddit’s Mod Summit

Nazanin Andalibi will be the keynote speaker at the Third Annual Reddit Mod Summit on September 17, 2022. The virtual event brings together moderators on the popular social media site Reddit to discuss what’s new on the platform and interact with each othe...

Washington Post: Facebook bans hate speech but still makes money from white supremacists

The Washington Post reports that despite Facebook’s long-time ban on white nationalism, the platform still hosts 119 Facebook pages and 20 Facebook groups associated with white supremacy organizations. Libby Hemphill said these groups have changed their ap...

Pennsylvania gubernatorial nominee courting voters on Gab pushes the GOP further right, experts say

Libby Hemphill tells the Pennsylvania Capital-Star that the Pennsylvania Republican gubernatorial nominee’s use of the far-right social media platform Gab is pushing the Republican party further to the right.

Hemphill explains that Gab emerged as a refu...

Meet CSMR's WiseDex team at the NSF Convergence Accelerator Expo 2022

Social media companies have policies against harmful misinformation. Unfortunately, enforcement is uneven, especially for non-English content. WiseDex harnesses the wisdom of crowds and AI techniques to help flag more posts. The result is more comprehensiv...

New website provides information for marginalized communities after social media bans

An interdisciplinary team has launched a new online resource for understanding and dealing with social media content bans. The Online Identity Help Center (OIHC), the brainchild of Oliver Haimson, aims to help marginalized people navigate the gray areas in...

KQED: Sarita Schoenebeck on what a healthy online commons would look like

A podcast episode from KQED (California) on reimagining the future of digital public spaces features Sarita Schoenebeck, who shares some ways in which content moderation on social media platforms can become more effective through the use of shared communit...

David Jurgens receives NSF CAREER award

David Jurgens has been awarded a five-year, $581,433 grant from the National Science Foundation. The NSF CAREER award will fund his research on "Fostering Prosocial Behavior and Well-Being in Online Communities," which focuses on identifying and measuring ...

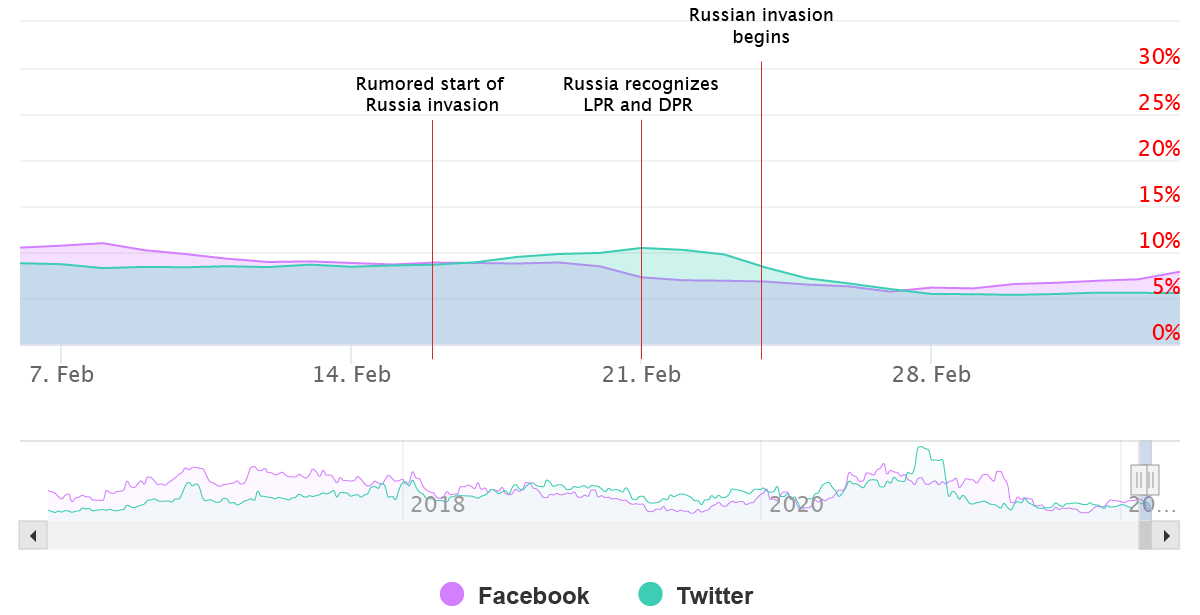

As Russia invades Ukraine, social media users “fly to quality”

A swift drop late last month in social media engagement with content from "Iffy" sources has prompted CSMR researchers to ask whether Facebook and Twitter users have been experiencing a "flight to news quality," or at least a flight away from less trustwor...

CSMR awarded NSF Convergence Accelerator grant

The National Science Foundation (NSF) Convergence Accelerator, a new program designed to address national-scale societal challenges, has awarded $750,000 to a multidisciplinary team of researchers led by CSMR experts.

The project, “Misinformation Judgme...

Paul Resnick featured in upcoming webinar on misinformation

Paul Resnick will be a featured guest in an upcoming webinar hosted by NewsWhip on Friday, August 13, 2021, at 11:30 AM EDT.

The webinar, "Misinformation 2021: Trends in public engagement," will explore the challenges of misinformation and data-informed...

Cross-Partisan Discussions on YouTube: Conservatives Talk to Liberals but Liberals Don’t Talk to Conservatives

We present the first large-scale measurement study of cross-partisan discussions between liberals and conservatives on YouTube, based on a dataset of 274,241 political videos from 973 channels of US partisan media and 134M comments from 9.3M users over eig...

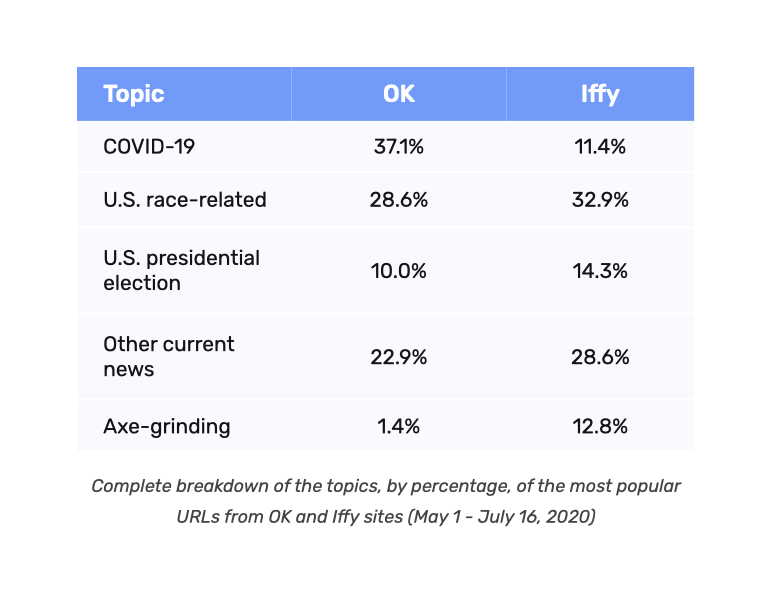

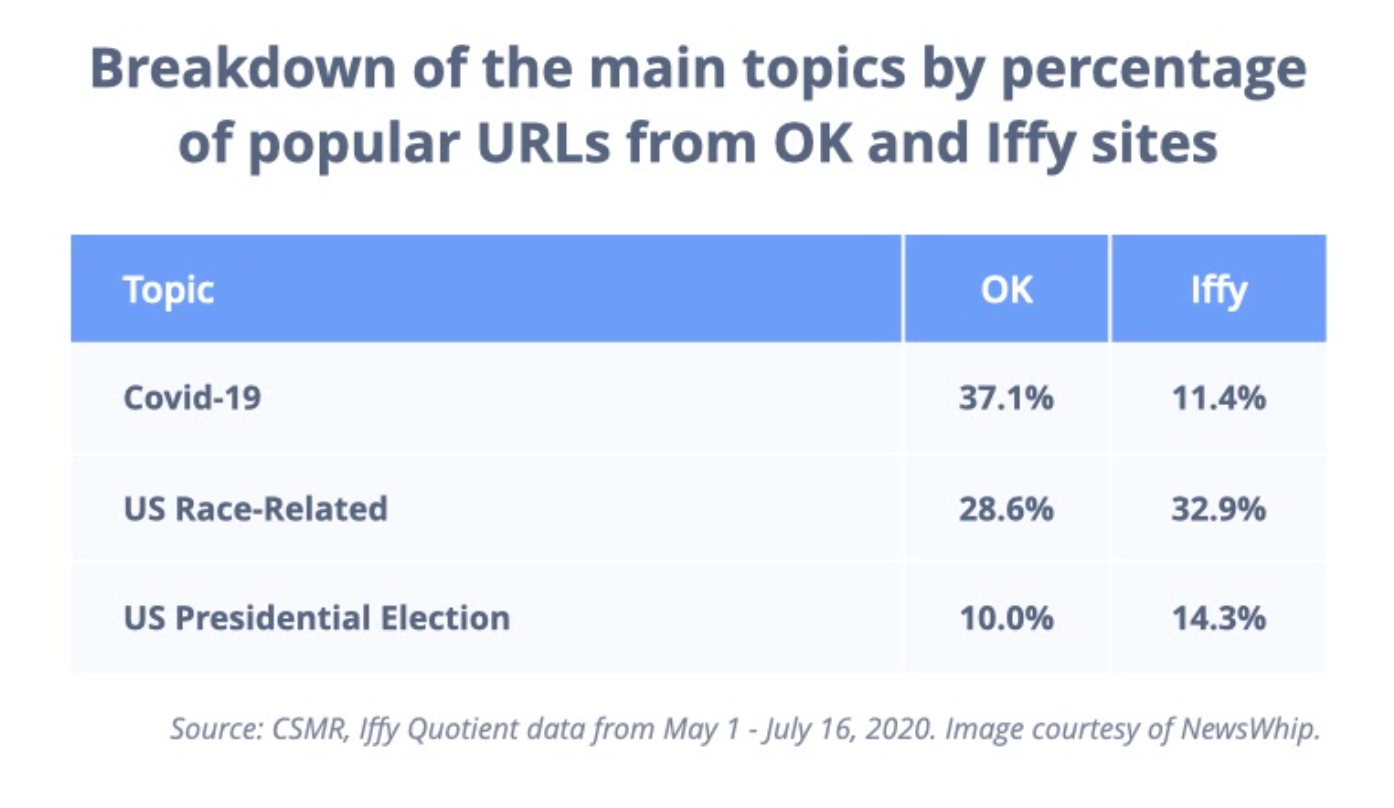

Need to settle old scores shows up in iffy social media content during pandemic, election

If you are among the Facebook and Twitter users who thought posts you read during the heart of the pandemic and election featured stories that seemed conspiracy-laden, politically one-sided, and just flat-out antagonistic, you might have been onto somethin...

Social media bans, restrictions have mixed impact on potential misinformation following Capitol riot

Following the wave of social media account suspensions and shutdowns, including former president Donald Trump’s, after the storming of the U.S. Capitol on January 6, the Iffy Quotient has shown different results on Facebook and Twitter.

Between January ...

Center for Social Media Responsibility names Hemphill to leadership role

CSMR is pleased to announce the appointment of Libby Hemphill to the position of associate director.

Libby has served on our faculty council since its inception, and she studies the democratic potential and failures of social media, as well as ways to f...

Racism, election overtake COVID-19 as "iffy" news on popular social sites

Amidst the pandemic, one might expect that the most popular news URLs from Iffy websites shared on Facebook and Twitter would frequently be about COVID-19. Upon closer inspection, though, this isn’t exactly the case.

CSMR has published Part 1 of a two-p...

CSMR featured in "Disinformation, Misinformation, and Fake News" Teach-Out

CSMR's work on addressing questionable content on social media, in particular our Iffy Quotient platform health metric, has been featured in the University of Michigan's "Disinformation, Misinformation, and Fake News" Teach-Out. In a video contribution, Ja...

Folha de S.Paulo: In times of uncertainty, readers seek information from known sources, says study

CSMR's research on the "flight to news quality" on social media has been covered by Folha de S.Paulo (São Paulo, Brazil).

Read the article from Folha de S.Paulo on our findings. (Please note that the article is in Portuguese.)

Read our original "flight...

Christian Science Monitor: Why old-style news is new again

CSMR's research on the "flight to news quality" on social media has been covered by The Christian Science Monitor.

Read the editorial from The Christian Science Monitor on our findings.

Read our original "flight to quality" analysis.

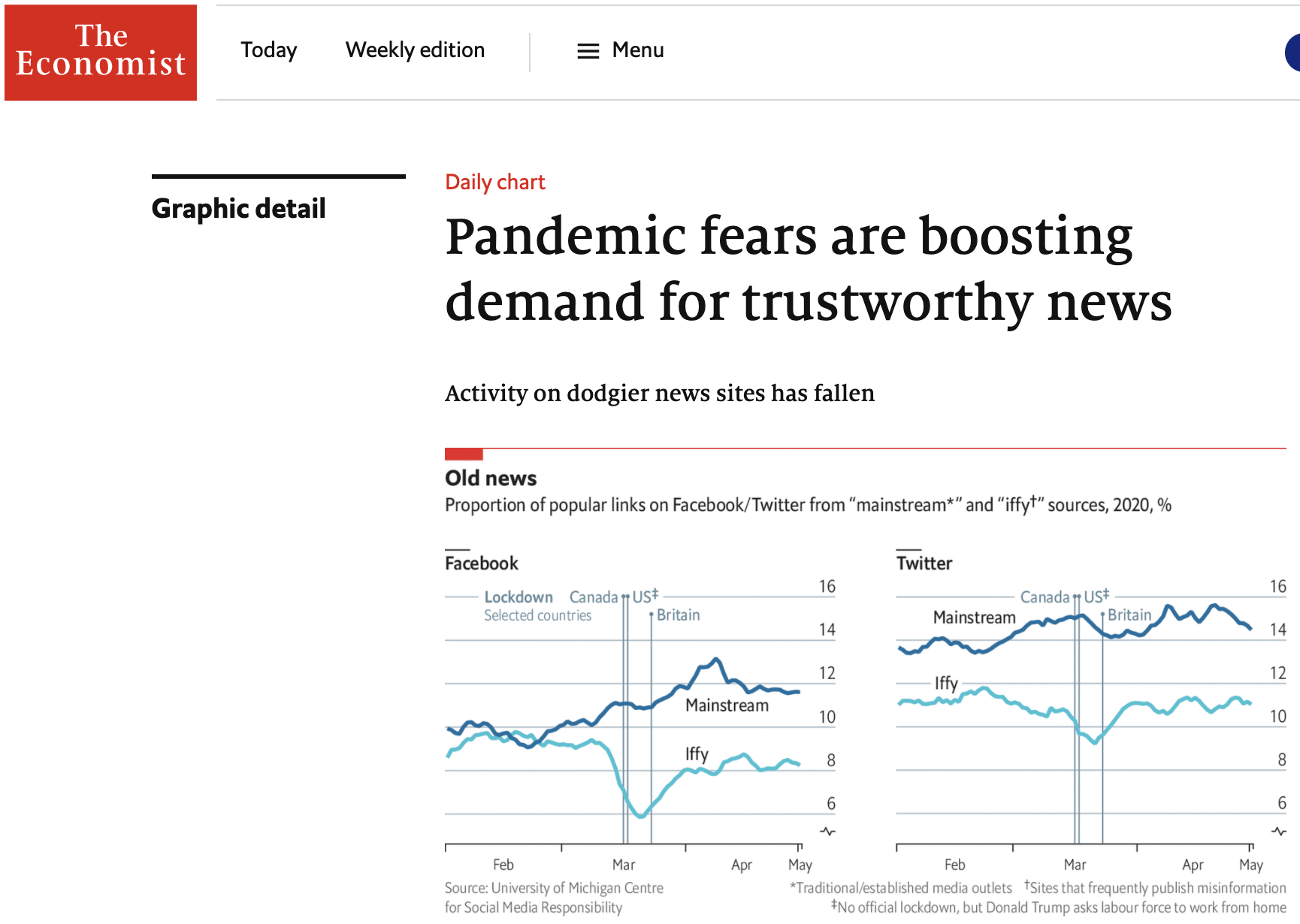

The Economist: Pandemic fears are boosting demand for trustworthy news

CSMR's research on the "flight to news quality" on social media has been covered by The Economist.

Read the daily chart article from The Economist on our findings.

Read our original "flight to quality" analysis.

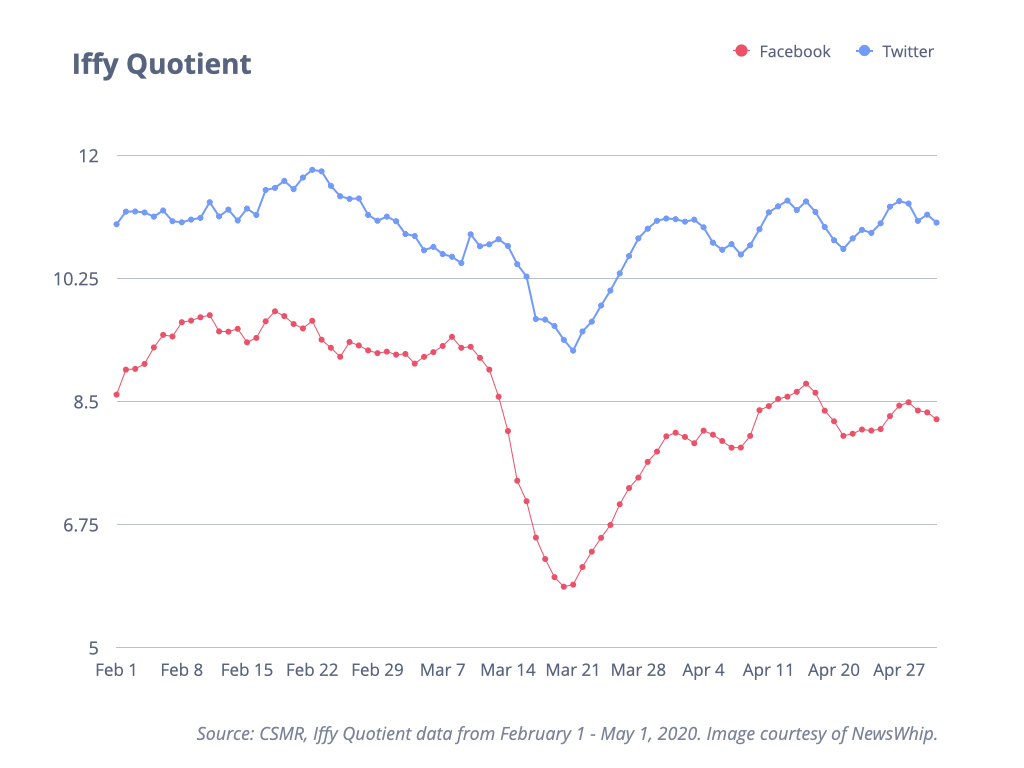

People “fly to quality” news on social sites when faced with uncertainty

When information becomes a matter of life or death or is key to navigating economic uncertainty, as it has been during the COVID-19 pandemic, it appears people turn to tried-and-true sources of information rather than iffy sites that have become a greater ...

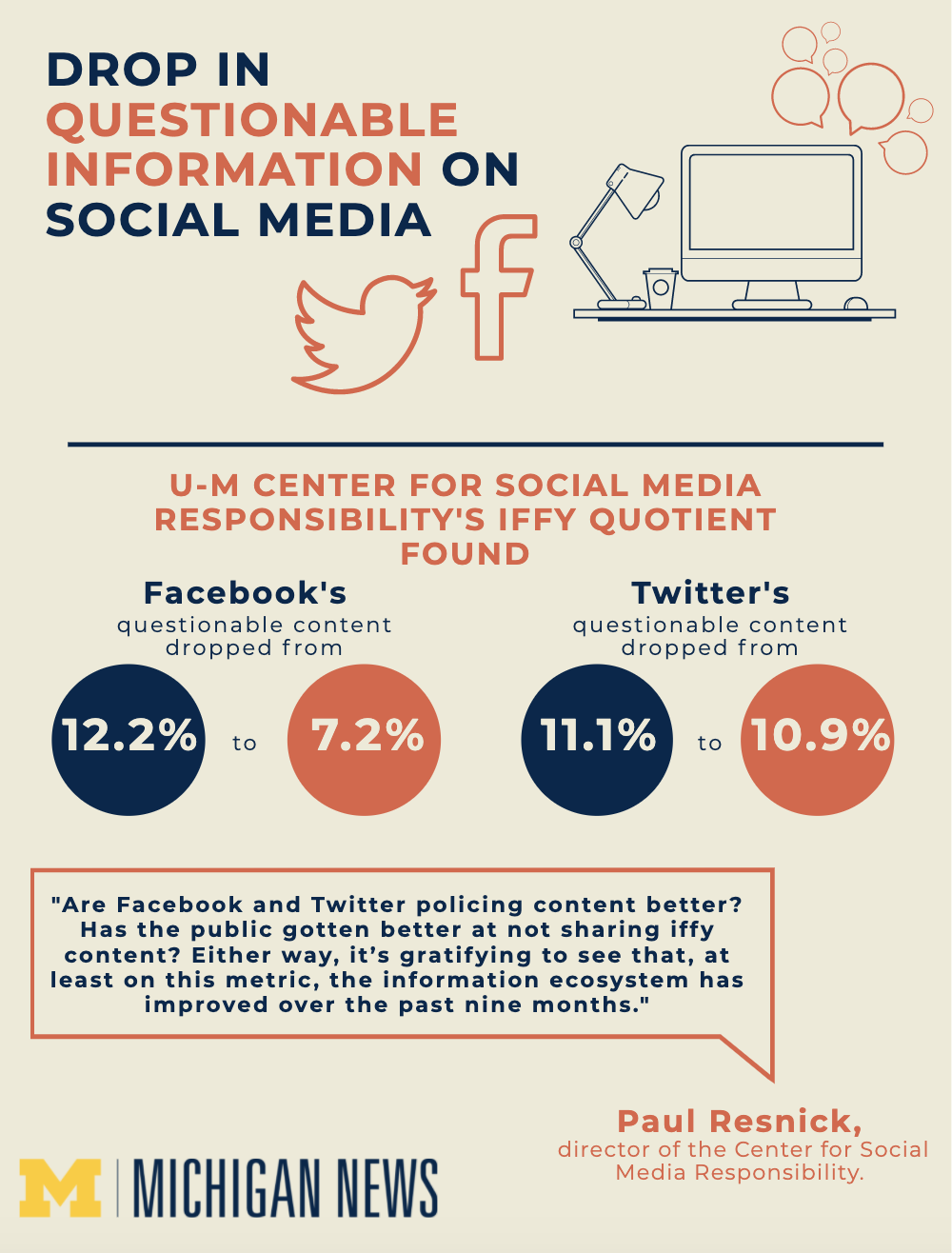

Press release: New version of Iffy Quotient shows steady drop of questionable information on social media, partners with NewsGuard for better data

Michigan News has issued a press release on CSMR's finding of a continued decline in questionable content on Facebook and Twitter. This finding comes courtesy of the newest version of our Iffy Quotient metric, the first of our platform health metrics des...

New research on best practices and policies to reduce consumer harms from algorithmic bias

On May 22, 2019, Paul Resnick was among the featured expert speakers at the Brookings Institution's Center for Technology Innovation, which hosted a discussion on algorithmic bias. This panel discussion related to the newly released Brookings paper on algo...

Unlike in 2016, there was no spike in misinformation this election cycle

In a piece written for The Conversation, Paul Resnick reflects on the 2016 election cycle and the rampant misinformation amplification that took place on Facebook and Twitter during that time. He notes that in the 2018 cycle, things have looked different: a...

AP: Social media’s misinformation battle: No winners, so far

With the U.S. midterm elections just a few days away, CSMR's Iffy Quotient indicates that Facebook and Twitter have been making some progress over the last two years in the fight against online misinformation and hate speech. But, as the Associated Press r...

We think the Russian Trolls are still out there: attempts to identify them with ML

A CSMR team has built machine learning (ML) tools to investigate Twitter accounts potentially operated by Russian trolls actively seeking to influence American political discourse. This work is being undertaken by Eric Gilbert, David Jurgens, Libby Hemphil...

The Hill: Researchers unveil tool to track disinformation on social media

The launch of CSMR's Iffy Quotient, our first platform health metric, has been covered by The Hill. Paul Resnick's comments on the Iffy Quotient's context and utility are also quoted.

Read the article from The Hill.

Paul Resnick selected for inaugural Executive Board of Wallace House

Wallace House at the University of Michigan has announced the formation of its inaugural Executive Board, which will include Paul Resnick.

Wallace House is committed to fostering excellence in journalism. It is home to programs that recognize, susta...

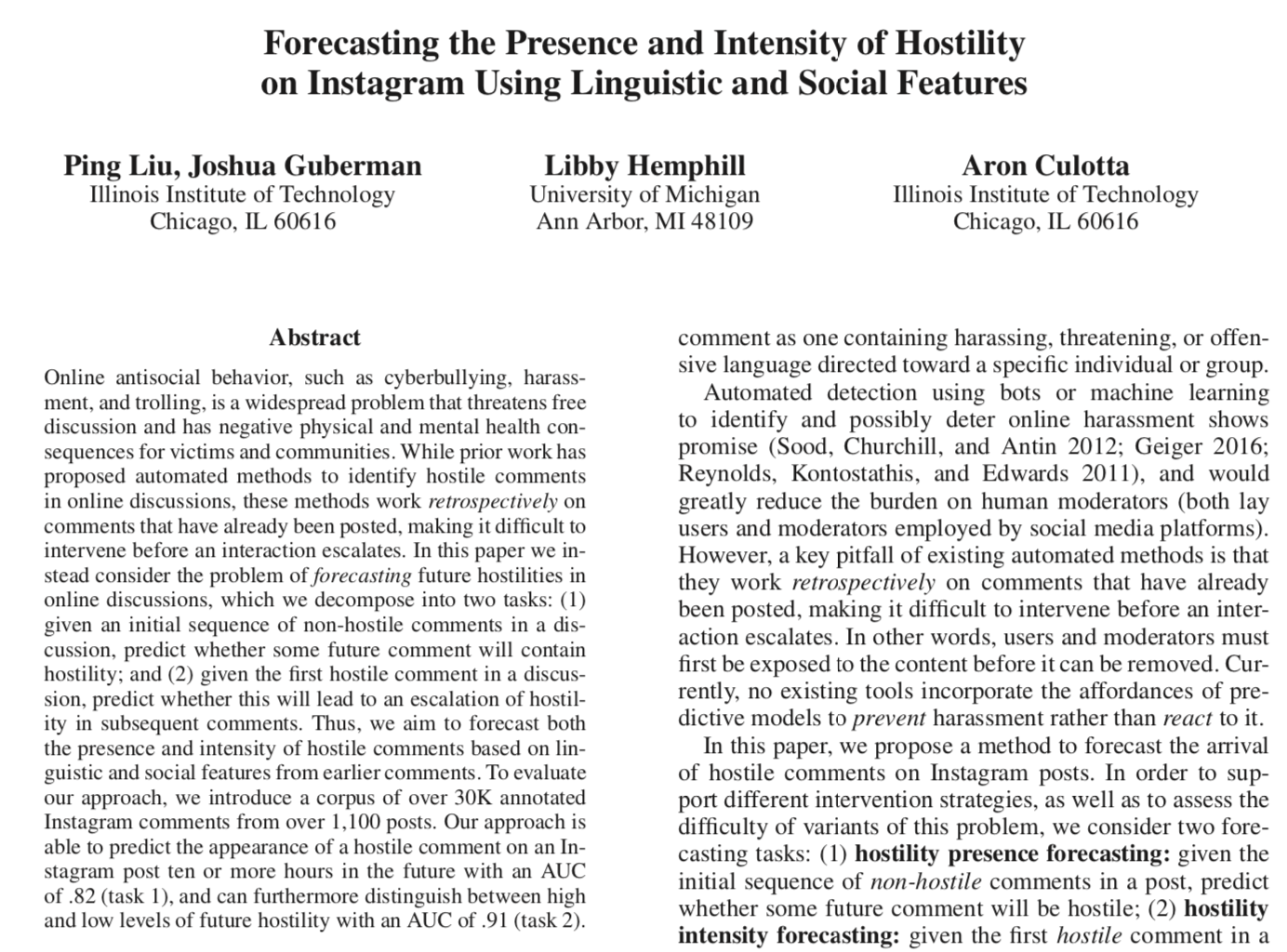

Forecasting the presence and intensity of hostility on Instagram using linguistic and social features

Libby Hemphill is a co-author of a paper investigating a possible prediction method for online hostility on Instagram.

Abstract:

Online antisocial behavior, such as cyberbullying, harassment, and trolling, is a widespread problem that threatens free di...

University of Michigan experts discuss Facebook & Cambridge Analytica

University of Michigan experts weigh in on the Facebook and Cambridge Analytica controversy in the University's "Privacy, Reputation, and Identity in a Digital Age" Teach-Out. CSMR's Garlin Gilchrist and Florian Schaub join Sol Bermann, University Privacy ...

Wall Street Journal: Trolls Take Over Some Official U.S. Twitter Accounts

Libby Hemphill was quoted in a Wall Street Journal article about trolls taking over some official U.S. government Twitter accounts.

Read the article from The Wall Street Journal.

"Genderfluid" or "Attack Helicopter": Responsible HCI Research Practice with Non-binary Gender Variation in Online Communities

Oliver Haimson is a co-author of a paper investigating how to collect, analyze, and interpret research participants' genders with sensitivity to non-binary genders. The authors offer suggestions for responsible HCI research practices with gender variation ...

Fragmented U.S. Privacy Rules Leave Large Data Loopholes for Facebook and Others

In a piece written for The Conversation, and reprinted in Scientific American, Florian Schaub comments on Facebook CEO Mark Zuckerberg’s Congressional testimony on ways to keep people’s online data private, and argues that Facebook has little reason to prote...

What your kids want to tell you about social media

Sarita Schoenebeck was quoted in a HealthDay article about the effects of parents' social media use.

Read the article at Medical Xpress (via HealthDay).

Why privacy policies are falling short...

Florian Schaub shares some thoughts on the shortcomings of technology-related privacy policies in an "Insights" article written for the Trust, Transparency and Control (TTC) Labs initiative.

TTC Labs is a cross-industry effort to create innovative desig...

Online Harassment and Content Moderation: The Case of Blocklists

Eric Gilbert is a co-author of a paper investigating online harassment through the practices and consequences of Twitter blocklists. Based on their research, Gilbert and his co-authors also propose strategies that social media platforms might adopt to prot...

AP: Social media offers dark spaces for political campaigning

Garlin Gilchrist was quoted in an Associated Press article about the dark spaces for political campaigning found on social media.

Read the article at Business Insider (via the Associated Press).

MLive: New University of Michigan center to tackle "social media responsibility"

Garlin Gilchrist discussed the launch of the Center for Social Media Responsibility and the center's mission on MLive.

Read the article from MLive.

Classification and Its Consequences for Online Harassment: Design Insights from HeartMob

A paper, authored by Lindsay Blackwell, Jill Dimond (Sassafras Tech Collective), Sarita Schoenebeck, and Cliff Lampe, about design insights from the HeartMob system will be presented at the CSCW conference in October 2018. These insights may help platform ...